The basics of search engine optimization for a web developer: improving SEO with the help of code and common sense

In this article, we will discuss the technical details of laying out web pages optimized for search engines. To consolidate what we learn, we will build a small website that follows all the rules and upload it to a server. This is not an ultimate guide but rather a cheat sheet on making a landing page attractive and functional and getting it to the top of the search results. The material will be useful for web developers who are just starting out with SEO. Experienced specialists are welcome to comment: share your tips and search engine optimization cases.

Briefly about SEO

SEO (search engine optimization) is the optimization of a website to raise its position in search results for user queries.

When we send a query to Google or another search engine, it tries to show the most relevant pages. In other words, the search engine's task is to pick, out of the many sites that mention the keywords, the ones that best match the query.

Search engines select pages based on many factors and rank the results accordingly. Naturally, site owners want to be as high in the results as possible. Each search engine works in its own way, so Google and other search engines may return different sets of pages for the same query.

Content is the main ranking factor. At the same time, there are many technical details that SEO specialists work on when optimizing sites for different search engines.

How do search engines collect information about web pages so that they can rank them by relevance? Imagine a website as a web in which each thread is a link leading from one page to another. Crawlers, or "spiders", move along this web: they visit pages and analyze them, studying the content, titles, meta tags, and so on. A crawler visits a page, records information about it, and saves that entry: in other words, it indexes the page.

Indexing produces huge indexes in which search engines can quickly find the information they need, rank sites by relevance, and show them to the user.

The role of semantics in SEO

To look at SEO from a web developer's perspective, let's build a simple website.

1. Create an index.html file

Start with the basics: specify the language, the encoding, and the viewport, which controls how the page is displayed on different devices.

2. Specify meta tags

Title. This is the page title, one of the most important parameters. It is shown on the search results page and on the browser tab where the page is open. We include as many relevant keywords as possible in the title, but the text must still be meaningful, and the tag itself must be unique on each page. Keep in mind that the page title significantly affects its position in search results.

<title>A book on JavaScript. Learning from scratch</title>

Description. This is the page description, shown under the title in search results. Its text is up to you, but it is worth including as many relevant keywords as possible.

<meta name="description" content="This book will teach you JavaScript programming from scratch....">

Keywords. The keywords meta tag is the subject of much debate: some say it no longer affects promotion, others say it is still better to fill it in. Google, for one, has stated that it does not use this tag for ranking. We'll list a few words just in case.

<meta name="keywords" content="JS, JavaScript, Learning JS">

3. Use semantic markup

The search robots that parse our page will certainly take its semantics into account. Nothing stops us from building the whole site out of div blocks, attaching the necessary classes, handlers such as onclick, and so on: it will work and look good. However, from the point of view of usability, accessibility, and semantics, this is wrong.

This is where semantic markup comes in: each block of the page gets the tag that matches its meaning (section, header, article, nav, footer). For example, if you need a button, use the button tag itself rather than a div or span with a button class. Then the button works out of the box not only with a mouse click but also from the keyboard: it can be focused with Tab and activated with Enter or Space. Accessibility for people with disabilities matters in its own right and can also affect the page's ranking.
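A minimal sketch of the difference, assuming a hypothetical addToCart() handler defined elsewhere on the page:

```html
<!-- Not semantic: a div dressed up as a button. It is not reachable with Tab,
     ignores Enter and Space, and screen readers do not announce it as a button
     unless you add tabindex, role="button" and key handlers yourself. -->
<div class="button" onclick="addToCart()">Add to cart</div>

<!-- Semantic: focus, keyboard activation and the button role come for free.
     type="button" stops it from submitting a form it may be placed in. -->
<button type="button" onclick="addToCart()">Add to cart</button>
```

Both elements can be styled identically with CSS; only the second one behaves like a button for keyboard users, assistive technologies and robots parsing the page.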

The same applies to the other HTML elements. Use the appropriate tags for links (for external links, add target="_blank" so that the link opens in a new tab), lists, subheadings, and so on. Once again: visually, a subheading can be made with a div that has a header class, and the user will perceive it as a heading or subheading. But in terms of semantics (and therefore SEO), this is not the best approach. Likewise, the main text of the page goes in paragraphs, not in div blocks.

Pages that are closed to the public, such as an admin panel, do not need SEO and should not be indexed by search engines at all (we will see how to disable indexing of individual pages later). On such pages, you can lay everything out quickly with div blocks.
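For illustration, here is a sketch of a page body built from semantic tags instead of div blocks. The page structure, the texts and the external URL are placeholders:

```html
<body>
  <header>
    <nav>
      <a href="/">Home</a>
      <a href="/books/">Books</a>
    </nav>
  </header>

  <main>
    <article>
      <h1>A book on JavaScript</h1>
      <p>The main text goes in paragraphs, not in div blocks.</p>

      <section>
        <!-- A real subheading instead of <div class="header">. -->
        <h2>What you will learn</h2>
        <ul>
          <li>Variables and types</li>
          <li>Functions</li>
          <li>Working with the DOM</li>
        </ul>
      </section>
    </article>
  </main>

  <footer>
    <!-- External link opened in a new tab; rel="noopener" keeps the opened
         page from accessing this one through window.opener. -->
    <a href="https://example.com/" target="_blank" rel="noopener">Publisher's website</a>
  </footer>
</body>
```

Modern browsers already apply noopener to target="_blank" links by default; stating it explicitly does no harm and protects users of older browsers.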

Open Graph and beautiful links

Open Graph, or OG, is a markup protocol that allows you to make links attractive and understandable. It was invented by Facebook developers, and now Open Graph support is available in almost all social networks.

For example, in social networks a link to a page without Open Graph is shown as plain clickable text, whereas a link to a page with Open Graph comes with a title, a description, and a picture.

This is necessary not just for beauty: although the use of Open Graph does not directly affect the search results, it improves the display of links in social networks and gives the user additional information about the page to which the link leads.

To add Open Graph, fill in the corresponding meta tags. There are five main ones: type, url, title, description, and image. In code, it looks like this:

<meta property="og:type" content="website">
<meta property="og:url" content="http://ya.ru/">
<meta property="og:title" content="This is an og title">
<meta property="og:description" content="this is an og description">
<meta property="og:image" content="https://9990/s3/multimedia-d/wc1000/6029228593.jpg">

To see the OG markup of any site, open a page, choose View Page Source in the context menu, and search the page for "og". On large sites, especially marketplaces, you will definitely find og:title, og:description, og:image, og:url, and more. It is worth looking at how different sites fill in these tags: sometimes you can pick up something useful.

As a result, our simple HTML will look like this:

<!doctype html>
<html lang="en">
<head>
  <meta charset="UTF-8">
  <meta name="viewport"
        content="width=device-width, user-scalable=no, initial-scale=1.0, maximum-scale=1.0, minimum-scale=1.0">
  <meta http-equiv="X-UA-Compatible" content="ie=edge">
  <title>A book on JavaScript. Learning from scratch</title>
  <meta name="description" content="This book will teach you JavaScript programming from scratch....">
  <meta name="keywords" content="JS, JavaScript, Learning JS">
  <meta property="og:type" content="website">
  <meta property="og:url" content="https://898989">
  <meta property="og:title" content="This is an og title">
  <meta property="og:description" content="this is an og description">
  <meta property="og:image" content="https://i909090/s3/multimedia-d/wc1000/6029228593.jpg">
  <!-- Robot directives: uncomment the one you need -->
  <!-- <meta name="robots" content="index"> -->
  <!-- <meta name="robots" content="index, nofollow"> -->
  <!-- <meta name="robots" content="noindex"> -->
  <!-- <meta name="robots" content="follow"> -->
  <!-- <meta name="robots" content="nofollow"> -->
  <!-- <meta name="robots" content="all"> -->
  <!-- <meta name="googlebot" content="index"> -->
  <!-- <meta name="yandex" content="noindex"> -->
</head>
<body>
  <img src="400x400.png" alt="need to fill in" srcset="400x400.png 500w, 700x700.png 800w">
  <h1>Hello world</h1>
  <!-- Semantic tags to use as the page grows -->
  <!-- Headings: real heading tags, not <p class="header5"></p> -->
  <!-- <h2></h2> -->
  <!-- <h3></h3> -->
  <!-- <ul>
    <li>1</li>
    <li>2</li>
    <li>3</li>
  </ul> -->
  <!-- Buttons: <button>, not <div class="button"></div> -->
  <!-- <header>
    <button></button>
  </header> -->
  <!-- <article></article> -->
  <!-- <section></section> -->
  <!-- <nav></nav> -->
  <!-- <footer></footer> -->
  <!-- <a href="https://ya.ru/" target="_blank"></a> -->
  <!-- <p>text in paragraphs</p> -->
</body>
</html>

Working with links in the context of SEO

Human-readable links

When you see the link cars.qq/auto/123/model/456, what can you guess about the page it leads to? The domain tells you it is something about cars, and that's all. But when someone sends you cars.qq/auto/bmw/model/m5, you immediately realize that it leads to a page about the BMW M5: an article or a listing for a car sale. This URL is understandable to a person: there are no identifiers or cryptic numbers.

Here are a couple more examples from the Internet. The links on the left are uninformative, containing index.php, category, and numbers. The links on the right are meaningful and easy to read. Keywords in links also help the page rank.

That said, a numeric identifier in a link is sometimes quite appropriate. For example, a link to a listing on a classified ads site will almost certainly contain one. Even then, the first part of the link should be human-readable, and the unique identifier (a set of numbers and letters) should appear only in the second half, which has little effect on ranking. Do not neglect human-readable links if you want your pages to appear at the top of search results.
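A short comparison using the cars.qq example from above; the listing ID 48213 and the link texts are made up for illustration:

```html
<!-- Hard to read: nothing in the path says what the page is about. -->
<a href="https://cars.qq/auto/123/model/456">BMW M5</a>

<!-- Readable: the make and model are in the path. -->
<a href="https://cars.qq/auto/bmw/model/m5/">BMW M5</a>

<!-- Listing page: a readable first part, a unique ID only at the end. -->
<a href="https://cars.qq/auto/bmw/model/m5/48213/">BMW M5 for sale</a>
```

In the last link, everything a reader or a search engine needs to understand the topic is in the first part of the path; the ID only makes the address unique.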

Canonical links

Let's imagine that we have two links leading to the same page: the original direct URL and one generated when working with categories or filters. The result is a duplicate, which is bad: it is junk content that wastes the crawl budget and competes with the original link when search engines index the site.

To avoid this, specify a canonical link: search engines will treat it as the main version of the page to index, and the other URLs will no longer be treated as competing duplicates. To do this, add a link element with rel="canonical" to the head of the HTML document and put the page's direct URL in its href. Here is how it looks in the code of a real page:

<link data-vue-meta="ssr" href="https://900999090898.com/companies/selectel/articles/788694/" rel="canonical" data-vmid="canonical">

To keep canonical links in a single, consistent form, basic redirects are used; they can be configured in the Nginx config. Redirects automatically send users from an address with www to the same address without www, from http to https (http should not appear anywhere at all), and from addresses without a trailing / to addresses with one.
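As a sketch of the duplicate case, suppose (hypothetically) that sorting a catalog produces the address https://example.com/books/javascript/?sort=price. That copy points search engines to the clean URL, written in the unified form described above: https, no www, trailing slash.

```html
<!-- In the <head> of https://example.com/books/javascript/?sort=price -->
<link rel="canonical" href="https://example.com/books/javascript/">

<!-- In the <head> of https://example.com/books/javascript/ itself:
     a self-referencing canonical is allowed and commonly recommended. -->
<link rel="canonical" href="https://example.com/books/javascript/">
```

Using an absolute URL in href avoids mistakes when the same page is reachable under several addresses.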

Other important SEO features

Now let's look at some important nuances that will help you fine-tune page indexing, as well as improve the user experience.

Control of search engines

The robots meta tag

When indexing, search robots operate according to certain algorithms, which do not always suit us. We can prohibit robots from doing certain things.

In the HTML document, specify the meta tag:

<meta name="robots" content="index">

The value of its content attribute determines what the robot does. index is the default value: with it, the robot indexes the page. If you specify noindex, the page will not be indexed.

Other values work too:

follow: allow the robot to follow links on the page; nofollow: prohibit it;

archive: show a link to the saved copy in search results; noarchive: do not show it;

noyaca (Yandex): do not use an automatically generated description;

all: equivalent to index and follow;

none: equivalent to noindex and nofollow.

Instead of robots, you can address a specific search engine's robot by name. For example, this meta tag prohibits indexing the page in Yandex while Google keeps indexing it:

<meta name="yandex" content="noindex">

Indexing is allowed by default: if you do not specify noindex, the page and its links will be indexed by all search engines, so these meta tags are needed only when you want to prohibit something. Keep in mind that search engines resolve conflicting directives differently; Google, for example, applies the most restrictive one.
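For the admin pages mentioned earlier, a minimal sketch of a head section that keeps all robots out might look like this (the page and its title are hypothetical). Robots can only see this tag if the page is not blocked in robots.txt; otherwise they never load the page and never read it.

```html
<!-- Hypothetical admin page, e.g. https://example.com/admin/ -->
<head>
  <meta charset="UTF-8">
  <title>Admin panel</title>
  <!-- Do not index the page and do not follow its links (applies to all robots). -->
  <meta name="robots" content="noindex, nofollow">
  <!-- The shorthand below means the same thing:
       <meta name="robots" content="none"> -->
</head>
```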

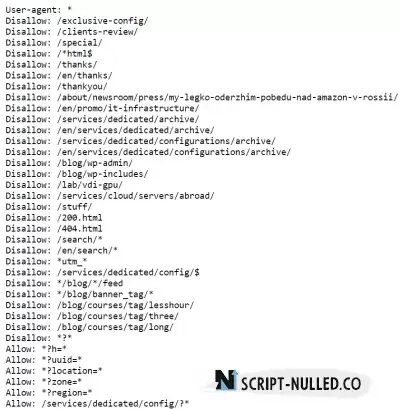

The robots.txt file

This file allows or prohibits crawling of sections or pages of the site for different robots. Want to exclude a page from all search engines? No problem. The same goes for a page that should be indexed by Yandex but not by Google. Strictly speaking, robots.txt controls crawling rather than indexing: a blocked URL can still end up in Google's index, without its content, if other pages link to it, so use the noindex meta tag to reliably keep a page out of search results.

This is how we prohibit Google from crawling the contacts page:

User-agent: Googlebot
Disallow: /contacts

One important point: the file must be located in the root of the site so that robots can find it, and it must not exceed 500 KB.

Viewing the robots.txt file of any website is simple: append robots.txt after the / in the home page URL.

Sitemap

A sitemap is an XML document that describes the current structure of the site: all its links and the dates they were last changed. Four properties can be set for each page: the page address (the loc tag), the date of the last update (lastmod), how often the page changes (changefreq), and its priority (priority). For example, if you want some pages to be indexed more eagerly than others, you can give each of them a priority from 0.0 to 1.0. Note that Google has stated that it ignores changefreq and priority.

The sitemap is built from canonical links, and each sitemap file is limited to 50 MB (uncompressed) or 50,000 URLs. If you have more pages and links than that, or want to build a large tree structure, you can create a base sitemap (a sitemap index) that links to other sitemap files. To let the indexer know about your sitemap, add a link to sitemapindex.xml in robots.txt (as in the screenshot above).

Keep in mind that a sitemap is not needed if the site has only a couple of dozen pages. It is needed for sites with a large, complex structure of links and pages, where you must make sure the right canonical links get indexed and certain pages are left out. Such a large XML file is not filled in by hand: it is generated automatically by a script or a generator.

Mobile device support

There is a technology called AMP (Accelerated Mobile Pages). Simply put, it lets you build fast versions of pages for mobile devices, and Google has historically favored such pages in mobile search.

Naturally, this technology, like mobile optimization in general, is not something everyone needs. For example, Habr is clearly tailored to desktop users, whereas almost everyone visits marketplaces and classified ads sites from a smartphone.

You can read how AMP works in detail in its documentation. In the context of SEO, the key point is that Google favors fast AMP pages when indexing and ranking results. It recognizes them by special tags added during development.
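Here is a sketch of those tags, assuming a hypothetical page at https://example.com/books/javascript/ with an AMP version at https://example.com/books/javascript/amp/. The regular page announces its AMP version, and the AMP version points back to the regular page as canonical:

```html
<!-- In the <head> of the regular page, https://example.com/books/javascript/ -->
<link rel="amphtml" href="https://example.com/books/javascript/amp/">

<!-- The AMP version, https://example.com/books/javascript/amp/ -->
<!doctype html>
<html ⚡ lang="en">
<head>
  <meta charset="utf-8">
  <!-- The AMP runtime. -->
  <script async src="https://cdn.ampproject.org/v0.js"></script>
  <link rel="canonical" href="https://example.com/books/javascript/">
  <meta name="viewport" content="width=device-width">
  <title>A book on JavaScript. Learning from scratch</title>
  <!-- A valid AMP page also needs the mandatory AMP boilerplate style,
       omitted here for brevity; see the AMP documentation. -->
</head>
<body>
  <h1>A book on JavaScript</h1>
</body>
</html>
```

Without the boilerplate style, this page will not pass AMP validation; treat it as an outline of the linking tags rather than a complete AMP page.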

Micro-markup

From the user's point of view, micro-markup (structured data) is the additional information shown under a link in search results: product prices, movie or theater showtimes, breadcrumbs, and so on. In every case, the point of micro-markup is to give the user useful information right on the search results page.

Micro-markup is quite easy to write, and there are detailed, clear instructions for it. With it, the information that matters most to the user can appear directly in search results: contacts, schedules, prices, and so on. Use a validator to make sure the markup is correct.
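A common way to add micro-markup is JSON-LD with the schema.org vocabulary. Below is a hedged sketch for the book page from our example, with a product price and breadcrumbs. The price, currency and URLs are placeholders, and the exact set of required properties depends on the search engine, so check the result with a validator:

```html
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "Product",
  "name": "A book on JavaScript. Learning from scratch",
  "description": "This book will teach you JavaScript programming from scratch.",
  "offers": {
    "@type": "Offer",
    "price": "19.99",
    "priceCurrency": "USD",
    "availability": "https://schema.org/InStock",
    "url": "https://example.com/books/javascript/"
  }
}
</script>
<script type="application/ld+json">
{
  "@context": "https://schema.org",
  "@type": "BreadcrumbList",
  "itemListElement": [
    { "@type": "ListItem", "position": 1, "name": "Books", "item": "https://example.com/books/" },
    { "@type": "ListItem", "position": 2, "name": "JavaScript", "item": "https://example.com/books/javascript/" }
  ]
}
</script>
```

JSON-LD lives in its own script block, so it does not interfere with the visible layout and can be generated on the server from the same data as the page.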

Checking search engine optimization

Metrics

From a technical point of view, three metrics matter most; Google calls them Core Web Vitals.

LCP (Largest Contentful Paint) is the time it takes to render the largest visible element of the page, such as an image or a block of text. Google considers up to 2.5 seconds good and more than 4 seconds poor. Obviously, if the entire bundle and frontend weigh 200 KB, they will load quickly. If the bundle is not optimized, weighs 5 MB, and is loaded in one solid piece, the page may take 10 seconds to load, and even longer on an unstable connection.
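To see which element is the LCP candidate on your own page, you can log it in the browser with the standard PerformanceObserver API. This is a minimal sketch for local debugging, not a replacement for PageSpeed Insights, and the largest-contentful-paint entry type may not be available in every browser:

```html
<script>
  // Logs every LCP candidate the browser reports. The last one reported
  // before the user first interacts with the page is the page's LCP.
  new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      // startTime: when the candidate was rendered, in ms from navigation start.
      console.log('LCP candidate:', Math.round(entry.startTime), 'ms', entry.element);
    }
  }).observe({ type: 'largest-contentful-paint', buffered: true });
</script>
```

The buffered: true option delivers entries recorded before the script ran, so the snippet does not have to be the first thing on the page.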

FID (First Input Delay) is the delay between the user's first interaction with the page (entering text, clicking a button or a link, and so on) and the moment the browser can start processing that interaction. The delay occurs when the browser's main thread is busy, for example executing a large JavaScript bundle. Google considers up to 100 ms good and more than 300 ms poor; if FID does not fit within 0.3 seconds, the site needs work. FID thresholds are much tighter than those of LCP because the user does not have to wait for the whole page: some elements may load slowly or asynchronously while others are already available for interaction. Note that in March 2024 Google replaced FID with INP (Interaction to Next Paint) among the Core Web Vitals.
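The definition above maps directly onto the browser's first-input performance entry: FID is the gap between the moment of interaction and the moment the browser could start running event handlers. A minimal sketch that logs it to the console for a local check (Google's reports are based on data from real users, so your own number is only indicative):

```html
<script>
  // Reports the delay of the first user interaction (FID).
  new PerformanceObserver((list) => {
    for (const entry of list.getEntries()) {
      // startTime: when the user interacted;
      // processingStart: when event handlers could start running.
      const delay = entry.processingStart - entry.startTime;
      // entry.name is the event type, e.g. "pointerdown" or "keydown".
      console.log('First input:', entry.name, Math.round(delay), 'ms');
    }
  }).observe({ type: 'first-input', buffered: true });
</script>
```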

CLS (Cumulative Layout Shift) measures visual stability. Have you ever tried to press a button when, "Bang!", another block loads somewhere above, the button moves down, and you click the wrong thing? That is a visually unstable page, and this should not happen. Even if page elements load asynchronously, a placeholder should occupy their place until they are fully loaded, so that the layout does not jump and users are not annoyed. CLS is a unitless score: Google considers up to 0.1 good and more than 0.25 poor.

Google's PageSpeed Insights service is convenient for measuring these metrics. Besides LCP, FID, and CLS, it shows many other parameters related to performance, accessibility, best practices (including security), and SEO. Conveniently, you can measure both the desktop and mobile versions of the site.

Chrome has a similar built-in tool, Lighthouse, on which PageSpeed Insights is based. It is easy to use: open the page, press F12, and select the Lighthouse tab. Choose the settings, click Analyze page load, and wait for the report. The result is almost the same as in PageSpeed Insights, although individual metrics may differ slightly.
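A minimal sketch of reserving space in the markup so that late content does not push the rest of the page around; the file name, the sizes and the recommendations block are placeholders:

```html
<!-- Explicit width and height let the browser reserve a box with the right
     aspect ratio before the image file arrives, so nothing below it jumps. -->
<img src="cover-400x400.png" alt="Book cover" width="400" height="400">

<!-- A block filled in asynchronously (here, a hypothetical recommendations
     widget) gets a placeholder with a minimum height until its content loads. -->
<section class="recommendations" style="min-height: 320px">
  <!-- A script inserts the content here once it has loaded. -->
</section>

<!-- This button stays where the user expects it. -->
<button type="button">Buy</button>
```

The 320px value is arbitrary: pick a height close to that of the loaded content, otherwise the block will still shift when its real size differs.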

HTML validity

Invalid HTML can be parsed incorrectly by search robots and browsers, which may hurt indexing and rendering. You can check validity with the classic W3C validator: just enter the address of the page.

In our case, the HTML document is small, so checking it is less interesting than checking a full-fledged website: the validator issues a single warning, about the viewport settings, and that's it. On a real, large site there can be many errors, and they often go unnoticed. For example, an unclosed div is a very common mistake that may not affect what the user sees but hurts how search engines index the site.

Validators are good at finding layout errors, so do not neglect them. The more errors go unnoticed, the more likely search engines are to treat the page as suspicious and lower its position in search results.
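A minimal illustration of the unclosed div problem; the class name and texts are placeholders. Run as part of a full page, the first fragment should be flagged by the validator as an unclosed element:

```html
<!-- Broken: the <div> is never closed, so the parser places the footer
     inside the article block. Browsers recover without complaint, so the
     page may look fine, but the document tree is not what was intended. -->
<div class="article">
  <p>Article text</p>
<footer>Contacts</footer>

<!-- Fixed: the block is closed before the footer. -->
<div class="article">
  <p>Article text</p>
</div>
<footer>Contacts</footer>
```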

Crawl budget

The number of pages a search robot can crawl on a site is limited. Meanwhile, almost every site has pages that should not be indexed at all: admin sections, duplicates, and so on. Excluding them lets the robot spend its budget on the pages that matter and helps the rest of the site rank better.

Keep in mind that this work is worth doing when you are fighting for tenths and hundredths of a percent in SEO. This is relevant, for example, for giant marketplaces. If you have a small website, postpone crawl budget optimization and deal with more pressing issues: improve the quality of the content, work with keywords, configure Open Graph, and so on.
